Weaponised AI isn't killer robots, it's just humans killing on autopilot

This post was migrated from the old WordPress blog. Some things may be broken.

“You should not be afraid of AI. You should be afraid of the people building it.”

Jessica Matthews, founder of Uncharted Power

TL;DR: The incorporation of AI into weapons needs to be banned in a way similar to how the international Chemical Weapons Convention prohibits the development, production, acquisition, stockpiling, retention, transfer or use of those weapons as an entire class. The problem would be getting anyone to follow such a policy in an era when the very notion of international law, or of a rules-based international order, is on the downward slide.

The central gruesome feature of Israel’s current war on Gaza (ostensibly still referred to in much of the press as its ‘war on Hamas’) has been the immense surge in civilian deaths resulting from Israeli military operations. Casualty figures kept by Al Jazeera (as of 12 April) put the overall toll at a minimum of 33,634 Palestinians, the majority of them non-combatants, including 13,000 children and 8,400 women. At least 76,214 people have been injured, and 8,000 are missing. These numbers will be out of date by the time I hit publish on this blog post. Oxfam has declared the daily death rate in Gaza to be higher than that of any other major 21st-century conflict, with (as of January) the “Israeli military killing 250 Palestinians per day.” It’s still early in the century, so there’s still a chance for some other war to beat that record. But Gaza is providing a window into what 21st-century warfare looks like: automated decisions, with humans still pulling the trigger in the role that sci-fi had predicted would belong to the machines. The future is here and it’s brutally stupid.

Last week a pair of articles exposed the algorithm behind the incredibly high death toll in Gaza. The first was ‘Lavender’: The AI machine directing Israel’s bombing spree in Gaza, a collaboration between two Israeli news outlets, +972 Magazine and the Hebrew-language news site Local Call. The UK’s Guardian, with advance access to +972 and Local Call’s material, published ‘The machine did it coldly’: Israel used AI to identify 37,000 Hamas targets. If you’re only going to read one, I suggest the +972/Local Call collaboration; it’s the full deal. Both expose how the IDF has used artificial intelligence systems to identify what it labels “Hamas operatives” and to assign target values that allow higher tolerances for surrounding civilian casualties. This includes thousands of people killed inside their homes because a predictive algorithm suggested that an assessed target was very likely somewhere inside the building as well.

Already the news cycle has moved on. There has been little follow-up since these stories dropped. I think we should, though… stop. The occupation of Palestine has crossed over into automated, mechanised killing without the need for humans to do much second-guessing. This blog post can wait; go read those articles and come back. I’ll still be here.

Finished? Okay, let’s dig in. Prior to “Operation Iron Swords,” both U.S. and Israeli intelligence had estimated the membership of Hamas’s military wing at around 25,000 to 30,000, according to the Guardian piece. As both it and +972 & Local Call point out, the Lavender AI dataset inflated that number to 37,000 suspected targets. “The result, as the sources testified, is that thousands of Palestinians — most of them women and children or people who were not involved in the fighting — were wiped out by Israeli airstrikes, especially during the first weeks of the war, because of the AI program’s decisions,” writes Yuval Abraham in the +972/Local Call exposé.

Take a pause and a couple of breaths on that one. There are things worth absorbing here. I want to bring up the wider implications, but not at the expense of the experiences faced by the Palestinian people in Gaza each day right now, or of the guilt that is entirely the Israeli government’s own. Based on a dataset and algorithm with an alleged (and large) 10% error rate, an AI system has generated tens of thousands of assassination targets. The Israeli military has acted on these, incorporating the AI’s ranking for allowable collateral damage (aka: a lot of civilian deaths).

These are not mistakes. Mistakes are something else. There’s a recurring argument as soon as the Palestinian death toll starts to climb steeply: that these are regrettable but inevitable mistakes. Barry Posen, on the Foreign Policy site, offers the classic, nearly form-letter version. It’s a sort of greatest hits compilation: “Urban warfare has always been brutal for civilians — and the war against Hamas was destined to be an extreme case.” What we learn from the Israeli military’s use of AI decision-making algorithms is that this is a choice with a tolerance dial. It’s not an accident, it’s a feature. Again, as a listicle…

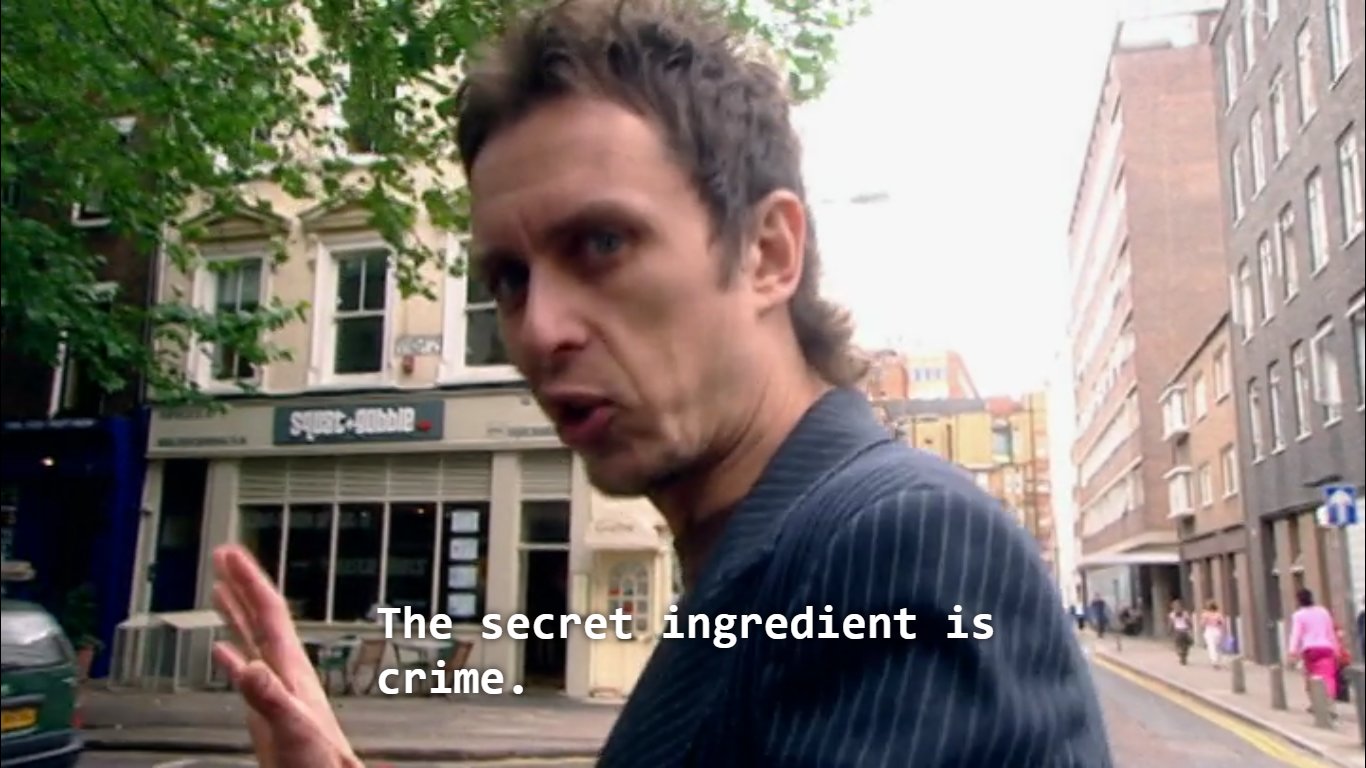

The ingredients:

- A loose method of identifying targets.

- tolerance for a lowly 90% accuracy rate.

- the willingness to bomb suspected targets inside their family homes.

- and instructions that allow for a wide berth of collateral damage.

The outcome: a huge proportion of those killed in Gaza have been civilians.

This isn’t an accident, it’s a functional specification. It’s code. It’s a recipe. It’s been signed off and deemed acceptable by Israel’s military and political leadership. As one person posted over on Bluesky, the Twitter replacement, “I’m old enough to remember AI ethicists telling us these systems will take on the values and biases of their makers.”

That the Israeli military is using AI in its Gaza operations is not the latest news; this was covered last winter. What the +972/Local Call investigation revealed last week was two things. The first is the depth of the military’s technical reliance. The Lavender program pulls from massive datasets containing information on nearly all of Gaza’s 2.3 million people to generate a list of targets. This is paired with another, previously unreported automated system called “Where’s Daddy?” that pings when a target enters their home; the system is built around the home being the easier location to strike. These programs join a previously reported AI system, creepily called “The Gospel,” which targets buildings and structures assessed to be used for Palestinian militant operations. The second revelation is how susceptible human beings are to slavishly following orders from machines to kill other humans.

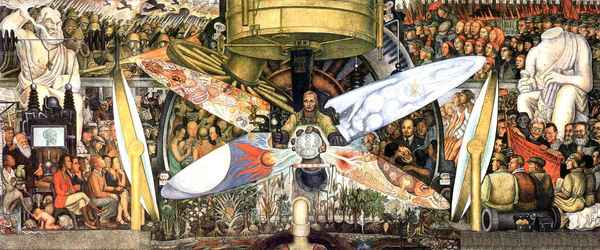

But it gets worse. It always gets worse. Consider how we’ve been trained to think about the future of conflict once AI gets involved (I can’t say “if/when” because it’s clearly already happening). I think it’s best summarised by that high-contrast, monochrome Black Mirror episode called Metalhead, in which weaponised, grunge versions of those Boston Dynamics quadrupeds chase Maxine Peake around for 41 minutes. But the Terminator franchise is probably what set most people’s expectations, with WarGames being a true origin of the genre (some film nerd out there will correct me, watch this space). These and other pop culture narratives have put the focus on autonomous machines going rogue, and that seems to be the threat model the world still likes to mull over when it comes to artificial intelligence with bullets or bombs attached. The idea is that we somehow maintain control by keeping a human being in the middle. How quaint.

This view of the future existential threat posed by a rogue AI has been perpetuated beyond sci-fi films. It’s an alarm several leaders in the commercial tech sector have been sounding. Back in 2017, Elon Musk and Alphabet’s (read: Google’s) Mustafa Suleyman were among 116 founders and leaders of AI and robotics companies who co-signed an open letter to the United Nations calling for a ban on “autonomous weapons.” In it they posited: “Once developed, lethal autonomous weapons will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend. These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways. … We do not have long to act. Once this Pandora’s box is opened, it will be hard to close.” Hindsight is a mother fucker. I mean, parts of this letter are spot on. Sure, ban the killer robots. Ban the shit out of them. But this letter was also wrong. It was short-sighted. For all its “fight the future” energy, it missed the threats that had already arrived from mixing AI-generated choices with weapon systems that still have humans at the trigger.

Elon and his mates were preceded a couple of years earlier by an alliance of human rights groups and concerned scientists organised under the Campaign to Stop Killer Robots, which in 2015 had proposed a similar international ban “on fully autonomous weapons” at a UN conference. Interestingly, the UK opposed such a ban. By the by, Stop Killer Robots continues to iterate and remain a relevant voice in the field. Aside from having a really groovy website design, it has some decent research material and campaigning resources, and has been a vocal critic of Israel’s use of Lavender data processing and of its introduction of ground robots for surveillance in Gaza. But I digress.

A Further Digression: Every article or piece of content that discusses autonomous weapons systems seems to require an obligatory still of the T-800 endoskeleton from one of the Terminator films (sometimes someone will use an image of the ED-209 from RoboCop, though not nearly as often, even if it’s more relevant to the discourse). Thus far in this blog post I’ve resisted. The only Terminator content I consider canon has Linda Hamilton in it, and it’s in ‘Terminator 2: Judgment Day’ that her Sarah Connor character drops this banger of a voiceover line, which remains one of my favourite film quotes of all time:

“Watching John with the machine, it was suddenly so clear. The terminator would never stop. It would never leave him, and it would never hurt him, never shout at him, or get drunk and hit him, or say it was too busy to spend time with him. It would always be there. And it would die to protect him. Of all the would-be fathers who came and went over the years, this thing, this machine, was the only one who measured up. In an insane world, it was the sanest choice.”

Make of that what you will. Maybe this is what Elon is afraid of.

Fast forward to more recent history. Last October the UN Secretary-General and the President of the International Committee of the Red Cross made a joint appeal for new international rules on autonomous weapon systems, specifically that “machines with the power and discretion to take lives without human involvement should be prohibited by international law.” Even more precisely, they called for “prohibiting autonomous weapon systems which function in such a way that their effects cannot be predicted. For example, allowing autonomous weapons to be controlled by machine learning algorithms.”

You can see the urgency. In 2021, for example, Georgetown University’s Center for Security and Emerging Technology released research on how China’s People’s Liberation Army is researching and adopting artificial intelligence and autonomous weapon systems at a rapid pace. Elsewhere, the U.S., Russia, South Korea, and the European Union join China as the five world leaders in spending on the development of weapons that can act on their own in one fashion or another.

That joint appeal went out just two days before the horrific Hamas-led attack of 7 October, which led to the deaths of nearly 1,200 Israelis and the kidnapping of about 240 others. Overnight, the unprecedented attack changed the entire nature of Israel’s decades-long occupation of Palestine and its methods of keeping Palestinians subjugated. Israel’s military “took a dramatically different approach,” Yuval Abraham reported in the +972/Local Call investigation. Any suspected or known member of Hamas’ military wing would be targeted, regardless of their seniority or importance. The scale and speed demanded by the new policy couldn’t be achieved by human means. “The Israeli army figured it had to rely on automated software and artificial intelligence. The result, the sources testify, was that the role of human personnel in incriminating Palestinians as military operatives was pushed aside, and AI did most of the work instead.”

“We didn’t know who the junior operatives were, because Israel didn’t track them routinely [before the war]. They wanted to allow us to attack [the junior operatives] automatically. That’s the Holy Grail. Once you go automatic, target generation goes crazy.”

A military source for the +972 Magazine and Local Call investigation

Nothing in that appeal would have prohibited anything described in the +972/Local Call revelations. Technically and operationally, these systems can’t be considered entirely autonomous. On 22 December last year, 152 countries voted (PDF) in favour of the first-ever United Nations General Assembly resolution on “killer robots.” Nice one. In and of itself, it does nothing. Human Rights Watch urged the UN to move forward on an actual international treaty to ban and regulate autonomous weaponry. Great. It doesn’t solve the problem that now exists. It doesn’t go nearly far enough. And if brought about poorly, it could even legitimise the AI systems presently being employed in Gaza at huge cost to human life.

There’s a false premise in the idea that simply shoving a human somewhere in the middle of a bunch of AI systems will add a dash of morality to the automated killing workflow. With automated target orders, military personnel can increase their quotas, which seems to be the point here. It starts sounding like how Amazon warehouse workers are managed via surveillance and data points. “The only question was, is it possible to attack the building in terms of collateral damage,” a source told +972 and Local Call. “Because we usually carried out the attacks with dumb bombs, and that meant literally destroying the whole house on top of its occupants. But even if an attack is averted, you don’t care — you immediately move on to the next target. Because of the system, the targets never end. You have another 36,000 waiting.” Another source reported that personnel often served “only as a ‘rubber stamp’ for the machine’s decisions, adding that, normally, they would personally devote only about ‘20 seconds’ to each target before authorising a bombing — just to make sure the Lavender-marked target is male.” And those killed around them can be anyone.

An Interlude: Every indication is that the AI currently being developed anywhere isn’t all that trustworthy. That doesn’t mean there aren’t valid use cases, just that there should be a sceptical-first approach to anything claimed to be an AI product. There’s a high likelihood its capabilities are being oversold or that it’s not entirely doing what it says on the tin. Or it’s a con. Consider how the Washington State Lottery had to pull down an AI gimmick page on its website because it started generating porn. Or the fake law firm that sent out AI-generated legal threats using AI-generated lawyers. Or how Amazon is quiet quitting its checkout-less grocery stores, which allegedly ran on AI detecting what customers had put in their baskets but were in fact relying on thousands of people in India looking at video monitors and trying to label things very quickly. And no list could be complete without a mention of how Elon has continued to make a mess of Twitter, which now has a product called Grok that seems to generate misinformation and disseminate it to millions of people, sparking panic. (If you’re a journalist and still using x.com as a distribution channel, I really don’t get the point of you.) And one more: late last year Google’s AI research wing DeepMind announced that its AI tool GNoME had found 2.2 million previously unknown kinds of crystals, leading to speculation that some could power future technology. Nice. It appears GNoME may have just been hallucinating them. Perhaps GNoME is going to move to a coastal town and open a hippy crystal shop near the beach.

These kinds of revelations are presently a daily phenomenon. But I’m not against AI things; quite the opposite, I’m often an early adopter and then a rapid abandoner whenever the latest app or generative AI toy drops. More seriously, there are a lot of great life-improving uses for it. I’d argue that AI is in some cases necessary to deal with the world as it now is. It can be incredibly effective in antivirus and endpoint security software. It’s proven useful in assisting the detection of some kinds of cancer and other diseases, and is handy for analysing large sets of climate data, which is becoming ever more important. I enjoy walking through a forest with Cornell Lab’s Merlin app telling me which birds I’m hearing. The app isn’t going to put that long-tailed tit on a hit list for a bombing raid, though.

In March of last year, Musk and a thousand of his friends signed another open letter, this one calling for a weirdly arbitrary 6-month moratorium on the development of AI systems “more powerful than GPT-4.” The letter goes into more detail about some proposed policy and regulation, but is aimed at addressing this problem: “Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones?” There was no shortage of heavyweights among its signatories. It always amazes me that news stories give Musk the headline, given that he is currently running an information channel awash in disinformation (some created by his own AI thing, Grok). Notably absent was OpenAI’s Sam Altman, likely already working on models more powerful than GPT-4.

In the other corner were the AI ethicists. Timnit Gebru and Margaret Mitchell were both fired from Google over “On the Dangers of Stochastic Parrots,” a paper they co-wrote with Emily M. Bender and Angelina McMillan-Major that was critical of the hype versus reality of large language models. Adding that to her CV, Gebru went on to found the DAIR Institute to carry out independent research, without the taint of corporate backing, on the impacts and harms of AI. In their response to the pause letter, the Stochastic Parrots authors lay out what it seemed to be pointedly avoiding: “transparency, accountability and preventing exploitative labor practices.” Not many in Elon’s group of Silicon Valley tech startup types seem terribly keen on open sourcing their algorithms, data collection and usage methods, or the other innards that make generative AI products pretend to create things. Gebru and company argue that the 6-month AI hiatus appeal both over-hypes hypothetical risks (boo, scary AI will get out of control and take over) and ignores the actual harms currently at play, such as worker exploitation, data theft, automating existing biases and racism, and further concentrating power into fewer hands. They argue that, in effect, the pause letter’s proposals are aimed at closing off access to AI research to all but the richest corporations.

What a simple, innocent time 2023 was. So long ago. Both letters make salient points about the risks posed, but they’re also not dark enough. They didn’t really tease out the possible dystopia we could face. Both lacked the zest, creativity and outside-the-box thinking of Yossi Sariel.

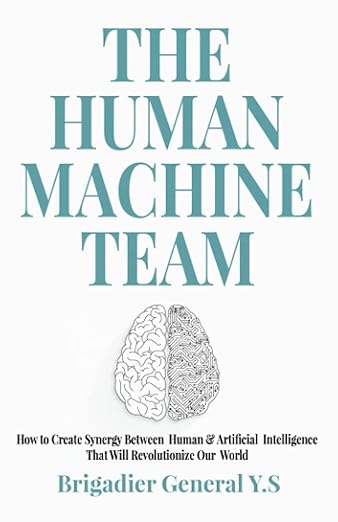

Abraham writes at the start of the +972/Local Call article, “in 2021, a book titled ‘The Human-Machine Team: How to Create Synergy Between Human and Artificial Intelligence That Will Revolutionize Our World’ was released in English under the pen name ‘Brigadier General Y.S.’ In it, the author — a man who we confirmed to be the current commander of the elite Israeli intelligence unit 8200 — makes the case for designing a special machine that could rapidly process massive amounts of data to generate thousands of potential ‘targets’ for military strikes in the heat of a war. Such technology, he writes, would resolve what he described as a ‘human bottleneck for both locating the new targets and decision-making to approve the targets.’”

You can find the book on Amazon. As it turns out, so can OSINT researchers. Ego’s a heckuva drug, and it’s hard to be a thought leader when your biggest work is always classified. Yossi outed himself as the previously anonymous head of Israel’s Unit 8200, and the architect behind its military’s use of AI to select people to assassinate in Gaza, by publishing an electronic version of the book on Amazon that included a Gmail address found to be linked to accounts directly connected with him elsewhere. Staying anonymous on the internet is hard. In this situation it’s okay, though, because the ICC needs a name in order to prosecute someone for genocide.

I have a dread fascination with this book, but not enough to pay a war criminal money for it. Maybe there’s a cracked PDF on The Pirate Bay somewhere. It is interesting to see how the author advertises it on Amazon. He (or his literary agent) writes that “a machine can use big data to generate information better than humans. However, a machine can’t understand context, doesn’t have feelings or ethics, and can’t think ‘out of the box’. Therefore, rather than prioritize between humans and machines, we should create The Human-Machine Team, which will combine human intelligence and artificial intelligence, creating a ‘super cognition’.” Amazing. Very much not “autonomous machines of death.” It’s RoboCop, not the ED-209. The blurb goes on to enthusiastically herald a new world where “the combination between human and artificial intelligence can solve national security challenges and threats, lead to victory in war, and be a growth engine for humankind.”

On the one hand, Yossi seems optimistic, with the utopia volume cranked up to 11, matching parts of the 6-month pause letter by Elon and company where it claims that “humanity can enjoy a flourishing future with AI” and “an ‘AI summer’ in which we reap the rewards, engineer these systems for the clear benefit of all and give society a chance to adapt.” But Yossi’s stated vision of the future doesn’t sound much like the reality he designed, as described by one intelligence officer in the +972/Local Call investigation: “There was a completely permissive policy regarding the casualties of [bombing] operations — so permissive that in my opinion it had an element of revenge … The core of this was the assassinations of senior [Hamas and PIJ commanders] for whom they were willing to kill hundreds of civilians.”

And so, here’s my point… and I do have one: using artificial intelligence in weapon systems needs to be banned on a far more extensive level than has already been suggested. It needs to be banned in the way that chemical weapons are banned around the world. This has been brought up a few times (here’s one, and here’s another; there are more), but there’s always a loophole. They inevitably refer to lethal autonomous weapons, which is incredibly limiting in scope given the present reality. We need a ban that takes AI out of the process of identification, ranking, tracking, everything. It needs to be sweeping, globally applicable, and backed by highly technical inspection and reporting processes of the kind that Aaron Maté just wouldn’t understand.

What the +972/Local Call investigation highlights is that humans tend to believe the technology. The goal then becomes expediency, efficiency, and racking up higher and higher numbers. Humans aren’t a check or a balance. They serve as little more than cogs in a numb, bureaucratic process of delivering genocide with cost- and time-saving efficiencies.

“There’s something about the statistical approach that sets you to a certain norm and standard. There has been an illogical amount of [bombings] in this operation. This is unparalleled, in my memory. And I have much more trust in a statistical mechanism than a soldier who lost a friend two days ago. Everyone there, including me, lost people on October 7. The machine did it coldly. And that made it easier.”

Israeli military source in the +972/Local Call investigation

Perhaps coming the closest to what’s needed (but still not close enough) is Toby Walsh, chief scientist at the University of New South Wales AI Institute. In the university’s ‘Engineering the Future’ podcast series, he argues that “lethal autonomous weapons need to be added to the UN’s Convention on Certain Conventional Weapons, the open-ended treaty regulating new forms of weaponry.”

My position is that even technically non-autonomous weapons systems reach a de facto level of automation because they alter the role of human beings in the killing supply chain. They essentially hack the operator’s free will, and remove responsibility and accountability. It can feel as though there is no commanding officer with whom the buck stops when orders to wipe out a family or an entire neighbourhood come under legal scrutiny, as at The Hague. But there is.

“Law is about holding people accountable,” says Walsh. “But you notice I said the word ‘people’. Only people are held accountable. You can’t hold machines accountable.” Yes and no. You can’t prosecute a computer, but you can arguably drag the designers of the genocidal systems those computers run into court. The Nuremberg Trials were the prime example of holding the architects of systemised atrocities to account. In the case of Gaza, there are already named parties. Yossi Sariel is certainly an architect of a systemic process to deliver what the ICJ has already declared as “acts [that] could amount to genocide.” Systems such as ‘Lavender’, ‘Where’s Daddy?’ and ‘The Gospel’ are technical specifications for committing a number of war crimes. This isn’t dual-use technology that was exploited by some bad actors. It was created expressly for what is now happening in Gaza. This is a system doing what it was made for. It’s as if Unit 8200 was tasked to make an LLM and just fed it Article 8 of the Rome Statute of the International Criminal Court (pdf) for programming output.

Walsh points out that chemical and biological weapons have been banned since 1925, and that AI-powered weapons could follow suit. Yes, they could. But it took several decades for most countries to commit to not using chemical weapons, and that commitment hasn’t been that strong for some. The pessimist in me is fairly certain a weaponised AI ban would also be ignored or side-stepped in all kinds of ways.

If the cyber weapons industry has anything in common with the AI industry, it seems to be a consensus that laws are meant to be severely bent if not broken. The New York Times recently published an investigation showing how companies like Meta, Google and OpenAI actively and knowingly worked on methods to ingest more content into their AI training data in ways that may have violated the copyrights of untold thousands of content creators. These systems are closed and themselves proprietary. AI companies hoovering up other people’s creations for their own profit has been a heist in broad daylight, brazen enough to get a bill introduced in Congress just this week that aims to “force artificial intelligence companies to reveal the copyrighted material they use to make their generative AI models.”

The proliferation and global sale of cyber weapons also falls in legal grey areas where export controls are still being discussed more than implemented. The innovation in this area is moving far faster than efforts to control where and how they’re used and who gets to use them against whom. The merger of AI and offensive technology is natural. More companies that were originally technology focused can now be part of the military industrial complex in exciting new ways. No one wants to leave money on the table.

You may recall Project Maven from the headlines a few years ago. It’s the Pentagon’s image recognition technology, used to analyse surveillance footage and for other wondrous innovations to bring AI onto the battlefield. Back around 2018, employees at Google and Microsoft made a lot of noise about their companies working on it, signing petitions saying they didn’t agree to have their efforts become part of weapon systems. Google left the project, but the more ethically flexible Palantir quickly stepped in and the project continued. It’s still there, in an increasing number of initiatives. It’s no longer called “Maven,” but it’s appearing in even more budget lines aimed at bringing more algorithms and AI systems into combat faster. The blending of the tech sector and the military without any hard guardrails or transparency makes it increasingly difficult for engineers, software developers and maintainers of technology to know where their innovations may end up. There are fewer and fewer ways to opt out short of throwing the devices into the sea and retraining as a shepherd or the like.

“Which BigTech clouds are the ‘Lavender’ & ‘Where’s Daddy?’ AI systems running on? What APIs are they using? Which libraries are they calling? … What work did my former colleagues, did I, did you contribute to that may now be enabling this automated slaughter?”

Meredith Whittaker, president of the Signal Foundation

At the heart of weaponised AI is the data that makes it work, and that’s derived from the surveillance state. This is not a secret. A West Point Modern War Institute article on Project Maven states that by 2017 enough surveillance footage had been amassed to equal “325,000 feature films,” or about 700,000 hours of footage.

As the +972/Local Call investigation notes, Israeli military intelligence has collected data on “most of the 2.3 million residents of the Gaza Strip through a system of mass surveillance.” This isn’t new information. It has been known, and reported on, for years that Israel has amassed vast databases of information on nearly every Palestinian it can in the occupied territories, including their biographical data, addresses, phone numbers, political affiliations, and facial recognition files. Unit 8200 boasts it can listen to every phone call in the West Bank and Gaza, and can track callers’ locations. If there’s a data point, it’s being collected and fed into algorithms that rank targets and output kill lists.

Surveillance data storage and processing is huge business. Similar to the Project Maven situation, in 2021 a number of Google and Amazon employees published an open call to their companies to pull out of Project Nimbus, “a $1.2bn contract to provide cloud services for the Israeli military and government” that “allows for further surveillance of and unlawful data collection on Palestinians, and facilitates expansion of Israel’s illegal settlements on Palestinian land.” Conflict between workers and their employers over this one is still continuing; neither Google nor Amazon seems to have changed course. No one likes to leave money on the table. The employees are correct, though. Particularly given what’s happened in Gaza over the last six months, Google and Amazon could find themselves in a position somewhat akin to IBM’s in 1933. That isn’t a comparison for dramatic effect. To avoid complicity in war crimes in Gaza, Canada, Spain, and Belgium have stopped shipping weapons to Israel. A Dutch court ruling has banned exports of equipment for F-35 fighter jets. UK arms sales to Israel are facing some legal challenges, ineffective as they may be. And Germany faces accusations of complicity in genocide at the ICJ for supplying weapons used in Gaza.

Meanwhile, Time Magazine this week exposed that Google has actively deepened its relationship with Israel’s military amid its attack on Gaza. “The Israeli Ministry of Defense, according to the document, has its own ‘landing zone’ into Google Cloud — a secure entry point to Google-provided computing infrastructure, which would allow the ministry to store and process data, and access AI services.” Signal’s Meredith Whittaker said over on Bluesky: “There is almost no way the large cloud companies providing massive compute services AI surveillance & data processing APIs aren’t implicated in the system of ‘ad targeting for death’ being used to facilitate mass murder in Gaza.” It’s well within the realm of possibility that tech companies supporting development or infrastructure for AI systems that enable war crimes by design could face similar allegations. It will be interesting when it happens.

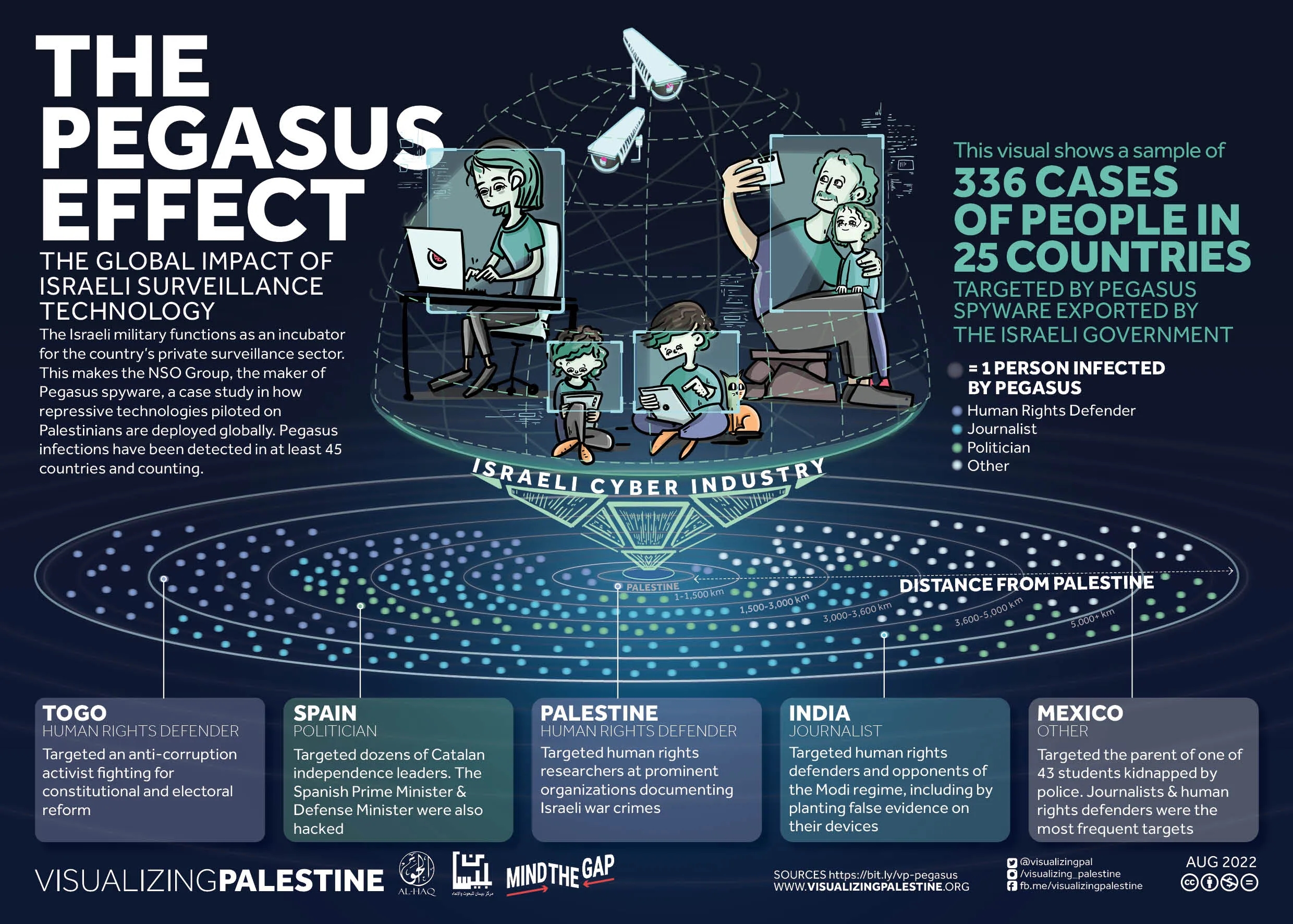

For the Tor Blog, Esra’a Al Shafei and Falastine Saleh write about “how Israeli spyware and surveillance companies navigate global scrutiny by rebranding and establishing offices worldwide, all while a network of venture capital firms facilitate their operations and help them avoid much needed accountability.” Surveillance isn’t just for military intelligence; it’s a money spinner worth many billions of shekels. A wide range of governments, including Mexico, India, Saudi Arabia, Greece, Madagascar, and the U.S., are purchasing spyware systems from Israeli firms that were developed using an occupied and captive population as guinea pigs. Invariably, these aren’t just used against criminals or terrorists, but have been deployed by various regimes to target political opposition groups, protesters, activists, and journalists. Since it was originally uncovered, NSO Group’s Pegasus spyware has been discovered on hundreds of journalists’ mobile phones in countries around the world, too many to list here. Another Israeli spyware company, Intellexa, whose technology has been used by authoritarian and anti-democratic regimes around the world, was banned last month from doing business in the U.S. after its Predator spyware had been found to have been used against two sitting members of Congress.

These companies are nearly all spun off from Unit 8200, Israel’s answer to the NSA or GCHQ, and the main goal is commercial exports, not national security. The group Visualising Palestine describes Unit 8200 “as an incubator for private Israeli cyber companies and tech entrepreneurs, with its veterans founding over 1,000 companies.” The “digital intelligence company” Cellebrite, for example, has sold its technology to Indonesian authorities for use against LGBTQ+ communities, and in Belarus and Hong Kong, where it’s been used against pro-democracy activists. Rest of World reports that “In Russia, Cellebrite has been used at least 26,000 times by Putin’s pet spy unit, the Investigative Committee, which has targeted opposition leader Alexey Navalny and hundreds of human-rights groups.” The United States’ ally seems to have little problem selling U.S. adversaries advanced tools for repression.

In the other corner from Yossi Sariel’s ‘The Human-Machine Team’ is a book by Antony Loewenstein, ‘The Palestine Laboratory: How Israel Exports the Technology of Occupation Around the World.’ In it, Loewenstein writes about how Israel has succeeded in, and has become dependent on, commercialising its military occupation. He looks at the full range of innovations Israel has achieved in methods of controlling an entire population and how it has successfully marketed them to the world. This includes border control, emerging from its separation wall, which Haaretz described as “a complex engineering and technological system: the only one of its kind in the world.” Magal Security Systems, which develops the barriers that seek to contain and monitor the Palestinian population of the West Bank and Gaza, markets its innovations around the globe. In 2017 it sought to construct the wall promised by Donald Trump between the US and Mexico, complete with its “Fiber Patrol” surveillance technology. Switching to his marketing role, Israeli Prime Minister Netanyahu tweeted, “President Trump is right. I built a wall along Israel’s southern border. It stopped all illegal immigration. Great success. Great idea.”

The current siege on Gaza, with its stated aim of “rooting out Hamas” and its death toll having passed the 30,000 mark, is also a showroom of sorts for Israel’s military and surveillance technology industry. In January Shalev Hulio, the former CEO of NSO Group, used Gaza as a backdrop to promote his new startup, Dream Security, which had apparently raised $33 million in venture capital. At the Singapore Airshow, the Gaza siege turned into a selling point: a live example of what was possible with Israeli weapons systems.

Let’s briefly return to Charlie Brooker’s Black Mirror episode “Metalhead.” While it’s been compared to a stripped down version of “The Terminator” and is widely seen as a kind of cautionary tale about the threats of autonomous AI weapons, that wasn’t how the original storyline was supposed to go. Brooker’s original script had a scene in which it was revealed that there was a WFH operator controlling the killer robot dog. “There was a bit I liked where he leaves the [control unit] while the robot is watching her while she’s up in the tree and he goes and gives his kids a bath,” Brooker had said in an interview. “But it felt a bit weird and too on the nose. It kind of felt superfluous. We deliberately pared it back and did a very simple story.” While the show is perfect as is, I think this other version would have been far closer to the existing threat we currently face. People distanced from the killing they’re carrying out, treating it like a set of technical processes in their day job, and tending to feel like they have less skin in the game.

The most literal way to create distance is physical. Consider drone warfare. The Bureau of Investigative Journalism (TBIJ) covered American drone warfare between 2010 and 2020. The U.S. is far from the only country to rely on drones, but it seems to do it a lot. President Obama “embraced the US drone programme, overseeing more strikes in his first year than Bush carried out during his entire presidency,” TBIJ found. Obama also extended the use of drone strikes to areas outside of where the U.S. was involved in active conflict. When Trump moved into the White House, he massively increased the use of drone warfare and loosened Obama-era rules on the requirements for carrying out attacks and on reporting casualties. While it’s hard to get precise figures (mostly due to those Trump-era policies), all told, civilians accounted for between 7.27% and 15.47% of those killed by drone attacks over the period. There are cost and time savings to drone warfare, but also an assumed psychological aspect.

In his book ‘Asymmetric Killing,’ Neil Renic, a lecturer in peace and conflict studies, writes that “UAV-exclusive violence marks a fundamental shift in the nature of hostilities, from an adversarial contestation to something more closely approximating judicial sanction. … One outcome of this shift has been the dehumanization of those targeted by the United States.” Drone warfare is rapidly spreading, with Russia, Iran, China, Azerbaijan, and others all using it. “When viewing potential targets from drone-like perspectives, people become morally disengaged,” writes Jordan Schoenherr, an assistant professor in psychology. “By turning people into statistics and dehumanizing them, we further dull our moral sense.” That seems to track with what the military sources had revealed to +972 and Local Call. “We took out thousands of people,” one source said. “We didn’t go through them one by one; we put everything into automated systems, and as soon as one of [the marked individuals] was at home, he immediately became a target. We bombed him and his house.”

The fear of autonomous weapons systems isn’t hype or even far-fetched. Fully and semi-autonomous slaughterbots are here now. But this is paradoxically a more comforting fear than the scalable and common reality that presently exists. We prefer the idea of the killer robot; it’s unthinking, alien and ultimately something people could choose not to build. The focus on banning autonomous weapons that have no human intervention also shows how much we want to believe that humans are somehow the safety valve. Toss in all the algorithms you like to select, track, and kill someone, but if we have a person in the middle then morality mysteriously remains in play? This runs counter to everything we already know. Humans are easily reprogrammable. The Stanford Prison Experiment in 1971 was a classic case of giving one group authority over another, fostering an in-group bias against that other group, and tossing in some impunity to do whatever they felt was needed. The 1963 Milgram Shock Experiment showed how far test subjects would go to (anonymously) harm other people because an authority figure instructed them to. They got up to wild things in the 1960s and ’70s, but it was nothing compared to our current age, when we have people “just following orders” dispensed by a machine whose sole job is to keep generating kill orders, running on a technology that is known to hallucinate.

Many people suggest that if we enable AI to make these kinds of targeting decisions, then there’s no one to hold to account. This is wrong. You just prosecute the people who built the systems and the people who paid them to build them. AI is people. Having these systems doesn’t somehow mean that it’s machines doing the killing. It’s still just people doing it, only on autopilot. When someone commands their dog to attack you, you sue the dog owner, not the dog. Even if it’s a robot dog.