When the machine programs you

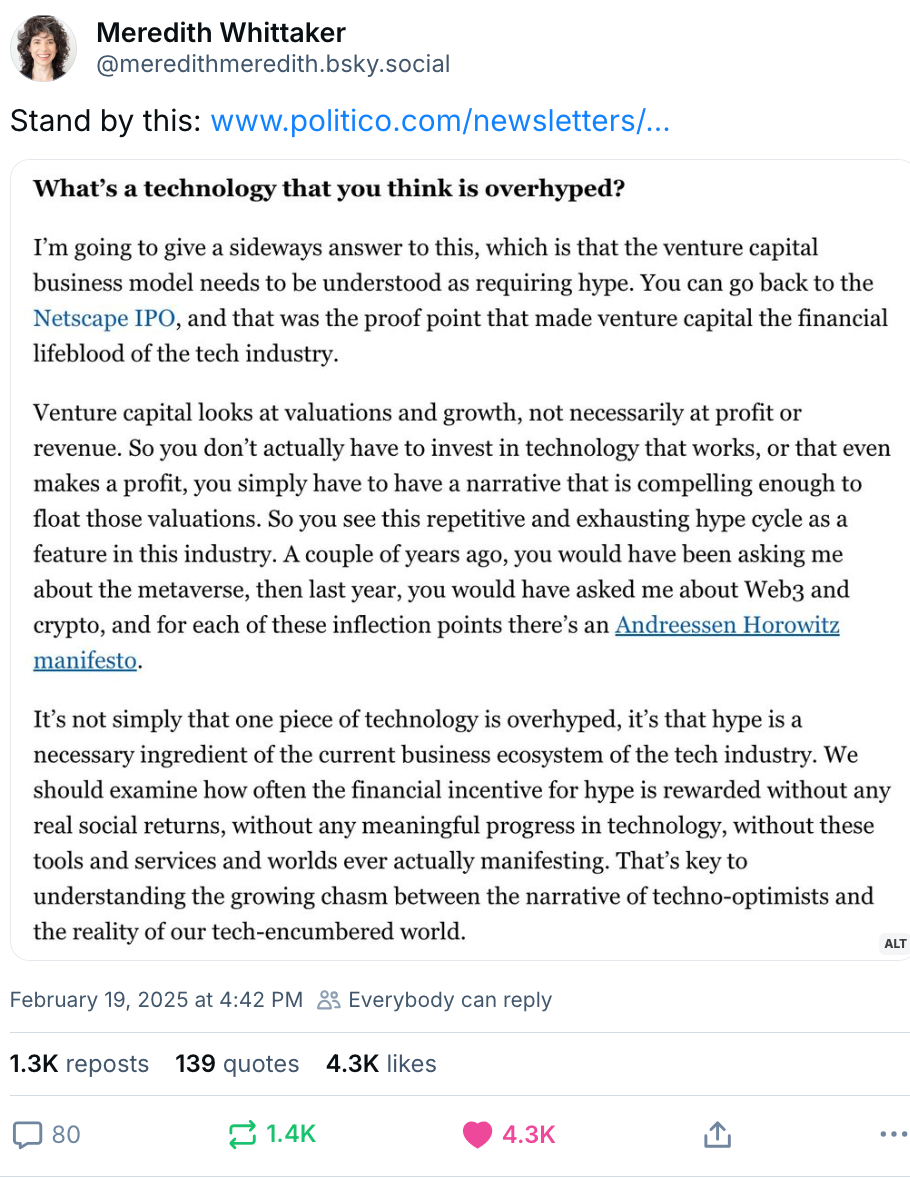

We do not have a technology problem; we have a venture capitalism problem.

This week's post is a continuation of our previous big generative AI thoughts dump. Last time we looked at what people believe the machine is doing. Now let's check out what the machine is actually doing to us.

It's important to get a grasp on this. What they sell as "AI" is getting chucked into everything at a rapid clip, and it's happening across every sector. In the U.S., Elon’s DOGE project is rushing out a custom generative chatbot for the US General Services Administration, “GSAi,” as part of the Trump regime's plan to replace as many people supporting services in the federal government as possible with automated-response garbage. Last month in the UK, the current government placed its bet on going all-in on artificial intelligence:

"Backing AI to the hilt can also lead to more money in the pockets of working people. The IMF estimates that – if AI is fully embraced – it can boost productivity by as much as 1.5 percentage points a year. If fully realised, these gains could be worth up to an average £47 billion to the UK each year over a decade."

I remember a promise on the side of a Brexit-promoting bus sounding about as plausible as that. Putting aside the billions worth of whatever currency you want to convert to in costs to mitigate the increased pollution from the data centres needed to run all of this, the race is on. The UK's technology secretary announced that the threat from some other mysteriously unnamed non-western power (China; the Guardian cites concerns around DeepSeek of all things, an example of energy efficiency and little else) meant that the artificial intelligence race must be led by "western, liberal, democratic countries," and that it would be infused into every part of economic activity, society, national security and defence. And on it goes. He said this was needed so "we can defend, and keep people safe."

DeepSeek is a chatbot. Its chief innovation was in frightening investors of several bloated Silicon Valley creations. Hundreds of billions are being spent on developing U.S. bots, and this one was booted up on $5.6 million. Scandal! Who let the poors in here!? It does the job more or less, yet it's doing so at far less expense in terms of infrastructure and on lower-powered processors. It's still as crooked as the rest of them, based on pilfered content, censored from addressing various topics, and often produces incorrect results. But the main issue is that the wrong crooks may pull ahead. The chatbot wars will be the dumbest wars.

There are worse uses of large-model machine learning technologies, and very little in the way of agreements or treaties to get out ahead of them. Representatives of 60 countries attending that AI global summit in Paris signed a declaration aimed at ensuring that AI is accessible, that its development is transparent, safe, secure and trustworthy, and that it is "sustainable for people and the planet." So, woolly and ultimately non-binding, noncommittal stuff. Sort of like a COP statement for tech. Still, the U.S. and its pet the UK refused to sign it. Which means they want to stuff it in everything without having to talk about it.

The industry tasked with keeping the rest of us informed on these things is also getting mobbed up in it. In January, it was leaked to the New York Times that the Washington Post's chief strategy officer planned to turn the paper into “an A.I.-fueled platform for news.” This month it was leaked to Semafor that NYT newsroom staff were told they'd be given access to platforms that would "eventually write social copy, SEO headlines, and some code," and that they could use it to develop "web products and editorial ideas."

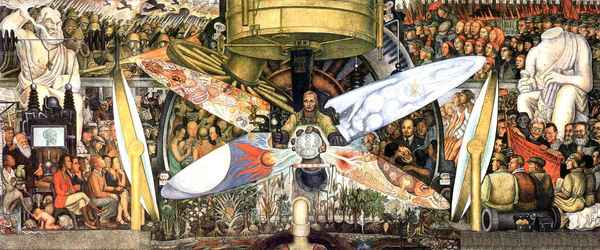

We do not have a technology problem. It sits there as a value-neutral suite of tools waiting to be used or misused. Like a hammer that can be used to make a door frame or commit a murder. We have a venture capitalism problem. It wants the hammer to do everything.

The problem with trying to promote a tool to do every kind of job is that it's going to do many of them badly. Or maybe it will look good from one angle but be incredibly damaging from every other vantage point. From an investor standpoint it works if they're making merry bushels of cash. For the rest of us it's an increasingly depersonalised world of treacherous slop that's obfuscating truth, increasing social isolation, killing the environment and undermining every creative field in human existence. It also doesn't like puppies. I know this.

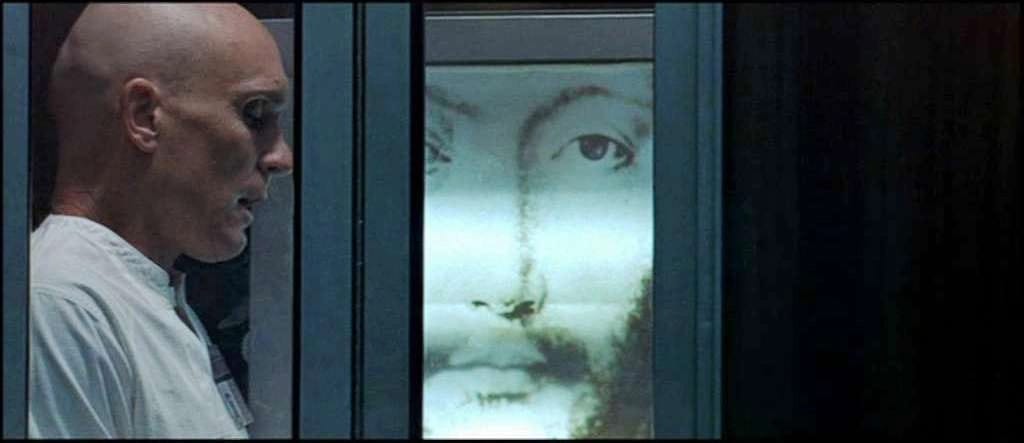

We are in need of a new movement of Luddites. "The always misunderstood Luddites," writes tech journalist and author Brian Merchant, "who fought back, not against technology, but against the titans who used technology to exploit ordinary people—against the 'machinery hurtful to commonality'—are more relevant than ever." This is about tech literacy, not opposition. The worst use case for what we're all calling generative AI is to feed into the anthropomorphism trap and design bots that feel ever more human. We don't need bots for that; we already know how to make humans. There are 8+ billion of us. What we're doing now is messing with what that means. There are several efforts to create chatbots of the deceased, so people can continue talking with fabricated versions of them. Replika markets itself as a solution for loneliness to leverage its users' insecurity and exploit it. The company was an early pioneer in the market of emotional corporate capture using anthropomorphised technology. These things muddle people's understanding of what they're doing when they engage with software.

The more you know about a tool, the more likely it is to stay in the toolbox until it has a use case. The rapid over-reliance on generative bots in just the last few years is a sign of exploitation on the part of the technology industry and a lack of understanding on the part of end users. Research published in January in the Journal of Marketing found that people who knew less about what AI is or how it works tended to adopt the technology more readily. "We call this difference in adoption propensity the 'lower literacy-higher receptivity' link," the research authors wrote in The Conversation. "Our studies show this lower literacy-higher receptivity link is strongest for using AI tools in areas people associate with human traits, like providing emotional support or counselling. When it comes to tasks that don’t evoke the same sense of human-like qualities – such as analysing test results – the pattern flips. People with higher AI literacy are more receptive to these uses because they focus on AI’s efficiency, rather than any 'magical' qualities."

The best use cases for these tools (and there are uses) don't involve talking to humans or performing tasks people can already do for themselves. The most marketable use cases are the flip side of that. They're also among the most destructive. A BBC study of the most widely used bot assistants (ChatGPT, Copilot, Gemini, and Perplexity) found that over half of their total output contained flaws, ranging from factual mistakes and misleading information to fake sources and other errors rendering the information basically wrong.

Now let's combine that lack of understanding of what the tools do or how they work, and the poor results generated by them, with another ingredient in our AI shit sandwich: The more people use these tools as shortcuts, the worse they get at critical thinking. Researchers with Microsoft and Carnegie Mellon University published their study this month (PDF) showing that the more people used AI for various tasks, the more their cognitive abilities deteriorated. Research participants "found that the more humans lean on AI tools to complete their tasks, the less critical thinking they do, making it more difficult to call upon the skills when they are needed." This may seem obvious, but when you outsource any task, your ability to do it will degrade. And yet we're being asked to do this more frequently via these pretend AI tools. “[A] key irony of automation is that by mechanising routine tasks and leaving exception-handling to the human user, you deprive the user of the routine opportunities to practice their judgement and strengthen their cognitive musculature, leaving them atrophied and unprepared when the exceptions do arise,” the researchers wrote.

There are more than enough Star Trek episodes across the franchise covering how bad things can get when we let the supercomputer take over the planet; we don't need to get into that here. At the shallow end, consider when ChatGPT went down for a few brief hours last month and one user posted on X: “chatgpt down in the middle of the workday i’m about to get fired pray for me.” It was funny in a kind of "okay, ha ha give me my make-do machine back, now it's getting serious" kind of way. Other complaints on the day included people having to suddenly write their own code or finish their school essays themselves. On the worse end of things, if people don't know who made the tools or what they're designed to do, or how to interpret the output, that can lead to all kinds of nastiness.

Almost predicting our current situation, in which Trump has canceled a Biden executive order on AI safeguards, a student at Stony Brook University way back in 2023 wrote a chilling op-ed in the student journal suggesting "there needs to be a shift in concern to how AI may be used to spread potentially fascist political agendas through social media platforms. Unregulated AI algorithms could spread misinformation and insight extremism that suits one political identity." In 2024 MEMRI published a report on how neo-Nazis and white supremacists became early adopters in leveraging LLM chatbots across social channels to do everything from translating speeches by Hitler, Goebbels, or Mussolini to manipulating video clips to change content and automating attacks. "The report found that AI-generated content is now a mainstay of extremists’ output," Wired reported. "They are developing their own extremist-infused AI models, and are already experimenting with novel ways to leverage the technology, including producing blueprints for 3D weapons and recipes for making bombs." Under America's new regime, it has a free hand.

The threat is not necessarily in what the bot is programmed to do. It's how the bot gets used to re-program the rest of us.